Management by measurement, in all domains, inevitably leads to side-effects at best and antithetical results at worst.

The problem is a well-known one, and indeed one we have discussed here before: as soon as you try to measure how well people are doing, they will switch to optimising for whatever you’re measuring, rather than putting their best efforts into actually doing good work.

In fact, this phenomenon is so very well known and understood that it’s been given at least three different names by different people:

- Goodhart’s Law is most succinct: “When a measure becomes a target, it ceases to be a good measure.”

- Campbell’s Law is the most explicit: “The more any quantitative social indicator is used for social decision-making, the more subject it will be to corruption pressures and the more apt it will be to distort and corrupt the social processes it is intended to monitor.”

- The Cobra Effect refers to the way that measures taken to improve a situation can directly make it worse.

As I say, this is well known. There’s even a term for it in social theory: reflexivity. And yet we persist in doing idiot things that can only possibly have this result:

- Assessing school-teachers on the improvement their kids show in tests between the start and end of the year (which obviously results in their doing all they can to depress the start-of-year tests).

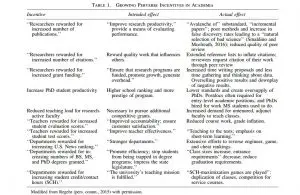

- Assessing researchers by the number of their papers (which can only result in work being sliced into minimal publishable units).

- Assessing them — heaven help us — on the impact factors of the journals their papers appear in (which feeds the brand-name fetish that is crippling scholarly communication).

- Assessing researchers on whether their experiments are “successful”, i.e. whether they find statistically significant results (which inevitably results in p-hacking and HARKing).

What’s the solution, then?
